This is “The Internet and Social Media”, chapter 11 from the book Culture and Media (v. 1.0).

Chapter 11 The Internet and Social Media

Cleaning Up Your Online Act

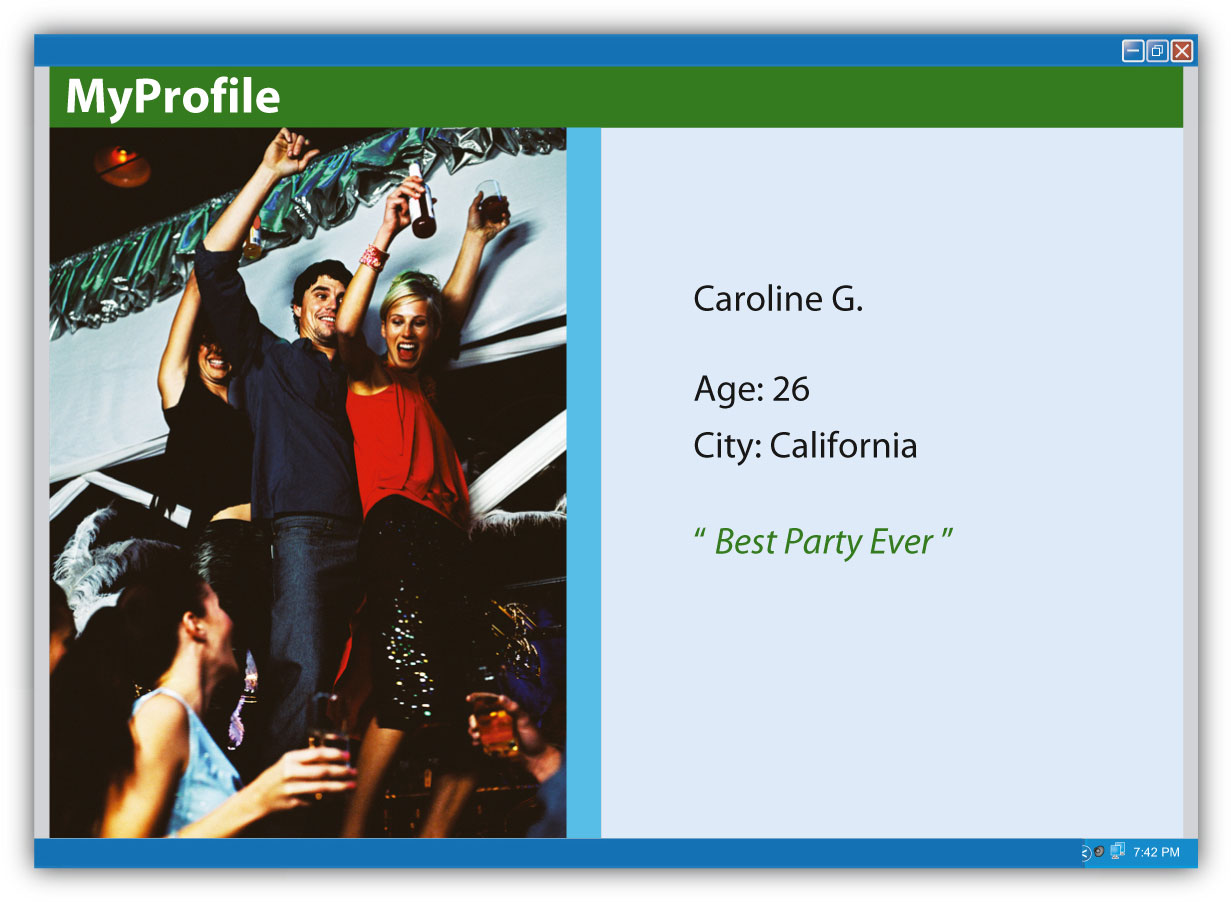

Figure 11.1

It used to be that applying for a job was fairly simple: send in a resume, write a cover letter, and call a few references to make sure they will say positive things. The hiring manager understands that this is a biased view, designed to make the applicant look good, but that is all forgivable. After all, everyone applying for a particular job is going through this same process, and barring great disasters, the chances of something particularly negative reaching the desk of a hiring manager are not that great.

However, there is a new step that is now an integral part of this application process—hiding (or at least cleaning up) the applicants’ virtual selves. This could entail “Googling”—shorthand for searching on Google—their own name to see the search results. If the first thing that comes up is a Flickr album (an online photo album from the photo-sharing site Flickr) from last month’s Olympian-themed cocktail party, it may be a good idea to make that album private to ensure that only friends can view the album.

The ubiquity of Web 2.0 social media like Facebook and Twitter allows anyone to easily start developing an online persona from as early as birth (depending on the openness of one’s parents)—and although this online persona may not accurately reflect the individual, it may be one of the first things a stranger sees. Those online photos may not look bad to friends and family, but one’s online persona may be a hiring manager’s first impression of a prospective employee. Someone in charge of hiring could search the Internet for information on potential new hires even before calling references.

First impressions are an important thing to keep in mind when making an online persona professionally acceptable. Your presence online can be the equivalent of your first words to a brand-new acquaintance. Instead of showing a complete stranger your pictures from a recent party, it might be a better idea to hide those pictures and replace them with a well-written blog—or a professional-looking website.

The content on social networking sites like Facebook, where people use the Internet to meet new people and maintain old friendships, is nearly indestructible and may not actually belong to the user. In 2008, as Facebook was quickly gaining momentum, The New York Times ran an article, “How Sticky Is Membership on Facebook? Just Try Breaking Free,” a title that seems at once like a warning and a big-brother taunt. The website does allow the option of deactivating one’s account, but “Facebook servers keep copies of the information in those accounts indefinitely” (Maria Aspan, “How Sticky Is Membership on Facebook? Just Try Breaking Free,” New York Times, February 11, 2008, http://www.nytimes.com/2008/02/11/technology/11facebook.html). It is a double-edged sword: On one hand, users who become disillusioned and quit Facebook can come back at any time and resume their activity; on the other, one’s information is never fully deleted. If a job application might be compromised by the presence of a Facebook profile, clearing the slate is possible, albeit with some hard labor. The user must delete, item by item, every individual wall post, every group membership, every photo, and everything else.

Not all social networks are like this: MySpace and Friendster still require users who want to delete their accounts to confirm this several times, but they offer a clear-cut “delete” option (ibid.). Still, the sticky nature of Facebook information is nothing new. Google even keeps a cache of deleted web pages, and the Internet Archive keeps decades-old historical records. This transition from ephemeral media (TV and radio, practically over as quickly as they are broadcast) to the enduring permanence of the Internet may seem strange, but in some ways it is built into the very structure of the system. Understanding how the Internet was conceived may help elucidate the ways in which the Internet functions today, from the difficulties of deleting an online persona to the speedy and near-universal access to the world’s information.

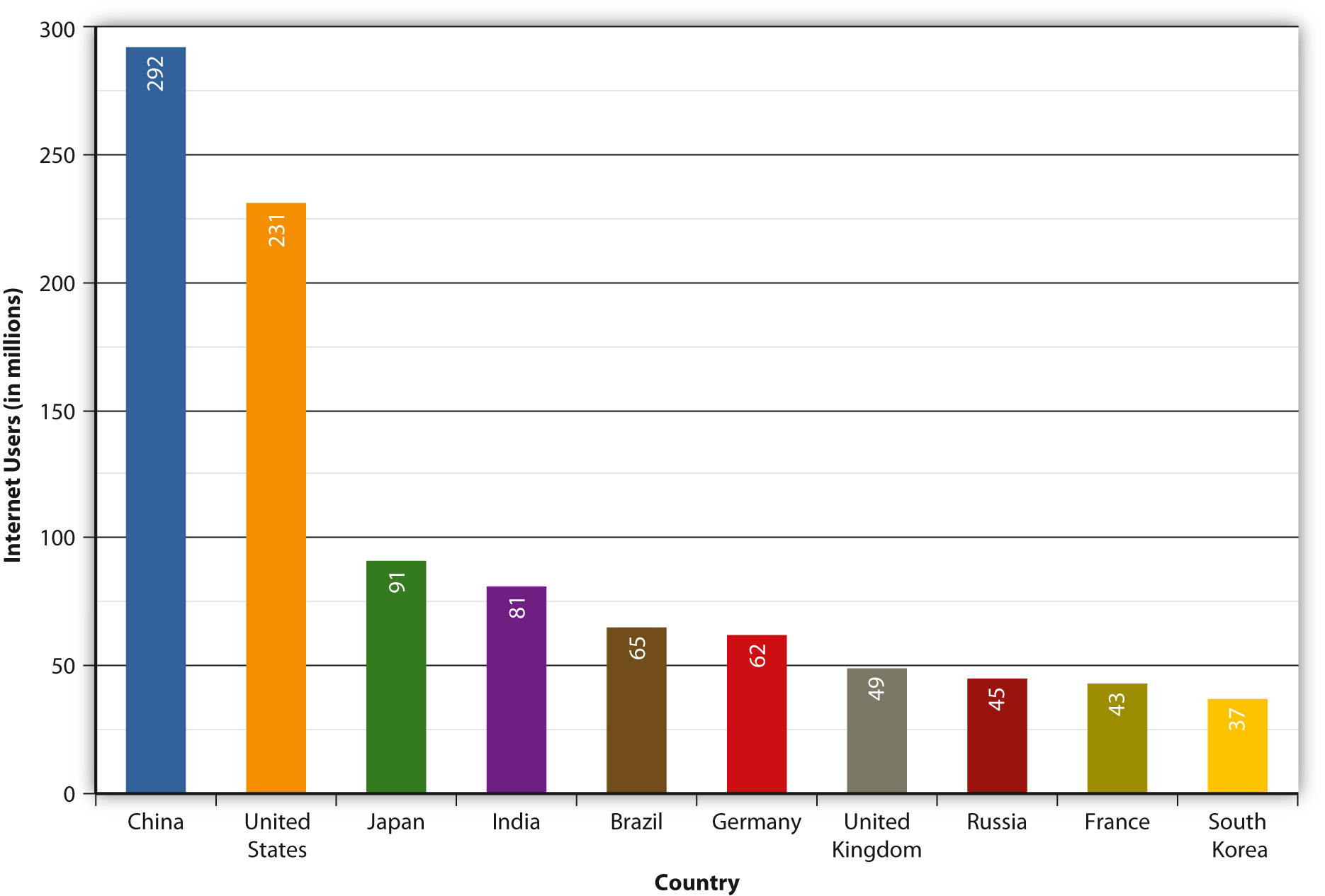

11.1 The Evolution of the Internet

Learning Objectives

- Define protocol and decentralization as they relate to the early Internet.

- Identify technologies that made the Internet accessible.

- Explain the causes and effects of the dot-com boom and crash.

From its early days as a military-only network to its current status as one of the developed world’s primary sources of information and communication, the Internet (a web of interconnected computers and all of the information publicly available on these computers) has come a long way in a short period of time. Yet there are a few elements that have stayed constant and that provide a coherent thread for examining the origins of the now-pervasive medium. The first is the persistence of the Internet: its Cold War beginnings necessarily influenced its design as a decentralized, indestructible communication network.

The second element is the development of rules of communication for computers that enable the machines to turn raw data into useful information. These rules, or protocols (agreed-upon sets of rules for two or more entities to communicate over a network), have been developed through consensus by computer scientists to facilitate and control online communication and have shaped the way the Internet works. Facebook is a simple example of a protocol: Users can easily communicate with one another, but only through acceptance of protocols that include wall posts, comments, and messages. Facebook’s protocols make communication possible and control that communication.

These two elements connect the Internet’s origins to its present-day incarnation. Keeping them in mind as you read will help you comprehend the history of the Internet, from the Cold War to the Facebook era.

The History of the Internet

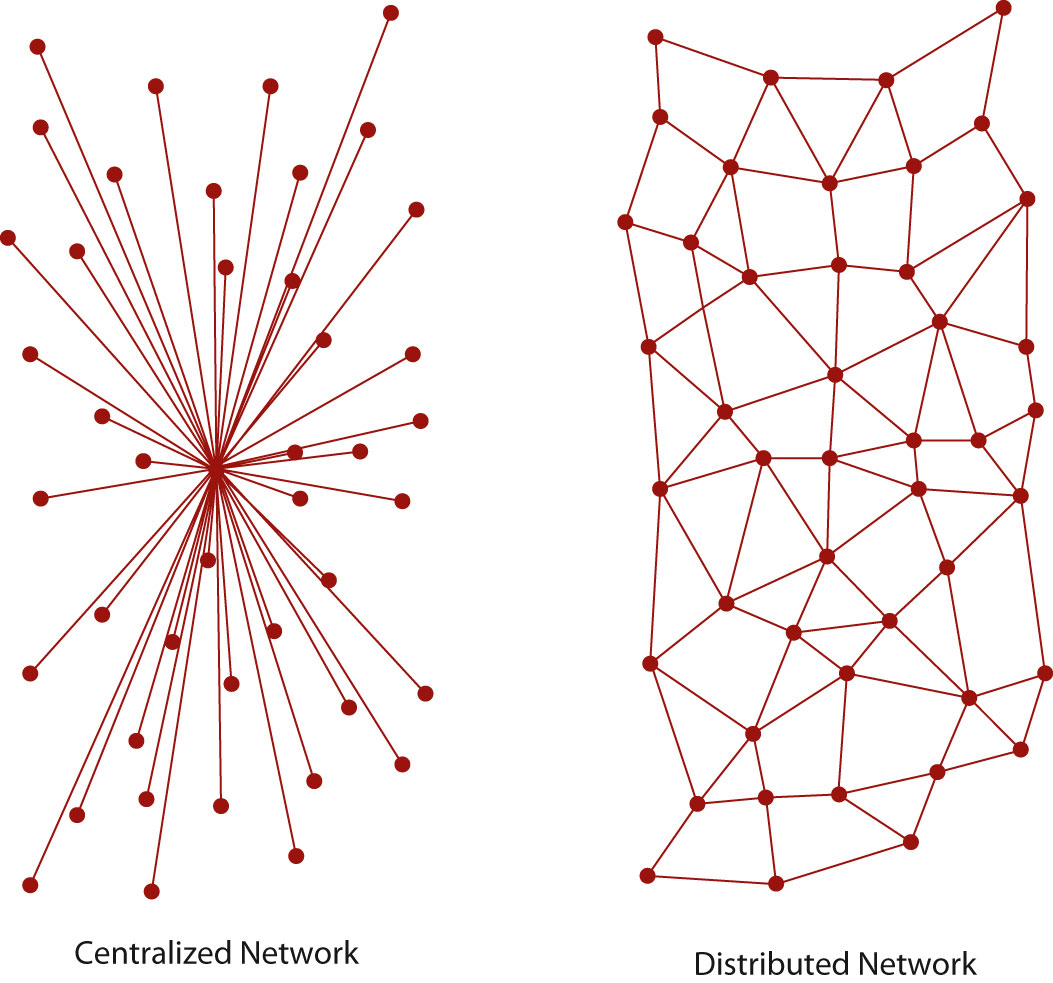

The near indestructibility of information on the Internet derives from a military principle used in secure voice transmission: decentralization (the principle that there should be no central hub that controls information flow; instead, information is transferred via protocols that allow any computer to communicate directly with any other computer). In the early 1960s, the RAND Corporation developed a technology (later called “packet switching”) that allowed users to send secure voice messages. In contrast to a system known as the hub-and-spoke model, where the telephone operator (the “hub”) would patch two people (the “spokes”) through directly, this new system allowed for a voice message to be sent through an entire network, or web, of carrier lines, without the need to travel through a central hub, allowing for many different possible paths to the destination.

During the Cold War, the U.S. military was concerned about a nuclear attack destroying the hub in its hub-and-spoke model; with this new web-like model, a secure voice transmission would be more likely to endure a large-scale attack. A web of data pathways would still be able to transmit secure voice “packets,” even if a few of the nodes—places where the web of connections intersected—were destroyed. Only through the destruction of all the nodes in the web could the data traveling along it be completely wiped out—an unlikely event in the case of a highly decentralized network.
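The difference between the two models can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the node names, the two tiny networks, and the `reachable` helper are hypothetical and do not describe any real military or RAND system. The sketch simply checks whether a message can still find a path after a node is destroyed.

```python
from collections import deque

def reachable(links, start, goal, destroyed=frozenset()):
    """Breadth-first search: can a message travel from start to goal
    while avoiding every destroyed node?"""
    if start in destroyed or goal in destroyed:
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for neighbor in links.get(node, ()):
            if neighbor not in seen and neighbor not in destroyed:
                seen.add(neighbor)
                queue.append(neighbor)
    return False

# Hypothetical hub-and-spoke network: every message passes through "hub".
hub_and_spoke = {
    "A": ["hub"], "B": ["hub"], "C": ["hub"],
    "hub": ["A", "B", "C"],
}

# Hypothetical web-like network: several paths connect any two nodes.
web = {
    "A": ["B", "C"],
    "B": ["A", "C", "D"],
    "C": ["A", "B", "D"],
    "D": ["B", "C"],
}

print(reachable(hub_and_spoke, "A", "B", destroyed={"hub"}))  # False
print(reachable(web, "A", "D", destroyed={"B"}))              # True
```

Losing the single hub cuts off every spoke, while the web-like network still delivers the message through node C.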

This decentralized network could only function through common communication protocols. Just as we use certain protocols when communicating over a telephone—“hello,” “goodbye,” and “hold on for a minute” are three examples—any sort of machine-to-machine communication must also use protocols. These protocols constitute a shared language enabling computers to understand each other clearly and easily.

The Building Blocks of the Internet

In 1973, the U.S. Defense Advanced Research Projects Agency (DARPA) began research on protocols to allow computers to communicate over a distributed network (a web of computers connected to one another, allowing intercomputer communication). This work paralleled work done by the RAND Corporation, particularly in the realm of a web-based network model of communication. Instead of using electronic signals to send an unending stream of ones and zeros over a line (the equivalent of a direct voice connection), DARPA used this new packet-switching technology to send small bundles of data. This way, a message that would have been an unbroken stream of binary data (extremely vulnerable to errors and corruption) could be packaged as only a few hundred numbers.

Figure 11.2

Centralized versus distributed communication networks

Imagine a telephone conversation in which any static in the signal would make the message incomprehensible. Whereas humans can infer meaning from “Meet me [static] the restaurant at 8:30” (we replace the static with the word at), computers do not necessarily have that logical linguistic capability. To a computer, this constant stream of data is incomplete—or “corrupted,” in technological terminology—and confusing. Considering the susceptibility of electronic communication to noise or other forms of disruption, it would seem like computer-to-computer transmission would be nearly impossible.

However, the packets in this packet-switching technology have something that allows the receiving computer to make sure the packet has arrived uncorrupted. Because of this new technology and the shared protocols that made computer-to-computer transmission possible, a single large message could be broken into many pieces and sent through an entire web of connections, speeding up transmission and making that transmission more secure.
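As a rough sketch of this idea, the Python below breaks a message into numbered packets, attaches a checksum to each, and reassembles them after they arrive out of order. The packet layout, the 8-byte packet size, and the use of CRC-32 as the integrity check are assumptions made for illustration; the text does not say which check the early packet-switching systems used.

```python
import random
import zlib

def make_packets(message: bytes, size: int = 8):
    """Split a message into numbered packets, each carrying a CRC-32 checksum."""
    packets = []
    for seq, start in enumerate(range(0, len(message), size)):
        payload = message[start:start + size]
        packets.append({"seq": seq, "payload": payload, "crc": zlib.crc32(payload)})
    return packets

def is_intact(packet) -> bool:
    """Recompute the checksum and compare it with the one the sender attached."""
    return zlib.crc32(packet["payload"]) == packet["crc"]

def reassemble(packets) -> bytes:
    """Reject corrupted packets, then put the rest back in sequence order."""
    for packet in packets:
        if not is_intact(packet):
            raise ValueError(f"packet {packet['seq']} arrived corrupted")
    ordered = sorted(packets, key=lambda p: p["seq"])
    return b"".join(p["payload"] for p in ordered)

message = b"Meet me at the restaurant at 8:30"
packets = make_packets(message)
random.shuffle(packets)  # packets may take different routes and arrive out of order
print(reassemble(packets) == message)  # True

# Simulate static on the line by overwriting the first byte of one packet.
damaged = dict(packets[0], payload=b"\x00" + packets[0]["payload"][1:])
print(is_intact(damaged))  # False
```

Because each packet can be verified on its own, a receiver only needs to ask for the damaged piece again rather than the whole message.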

One of the necessary parts of a network is a host. A host is a physical node that is directly connected to the Internet and “directs traffic” by routing packets of data to and from other computers connected to it. In a normal network, a specific computer is usually not directly connected to the Internet; it is connected through a host. A host in this case is identified by an Internet protocol, or IP, address (a concept that is explained in greater detail later). Each unique IP address refers to a single location on the global Internet, but that IP address can serve as a gateway for many different computers. For example, a college campus may have one global IP address for all of its students’ computers, and each student’s computer might then have its own local IP address on the school’s network. This nested structure allows billions of different global hosts, each with any number of computers connected within their internal networks. Think of a campus postal system: All students share the same global address (1000 College Drive, Anywhere, VT 08759, for example), but they each have an internal mailbox within that system.
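The campus analogy can be sketched with Python's standard `ipaddress` module. Everything specific here is hypothetical: the student names, the shared address `198.51.100.1` (taken from a range reserved for documentation), and the internal range `192.168.0.0/24` (a range reserved for private networks) are illustrative choices, not details from the text.

```python
import ipaddress

# Hypothetical campus: one shared global address, like "1000 College Drive".
GLOBAL_ADDRESS = "198.51.100.1"

# Hypothetical internal network, like the mailboxes inside the campus post office.
campus_network = ipaddress.ip_network("192.168.0.0/24")

# Hand out the first few internal addresses to some made-up students.
students = ["alice", "bob", "carol"]
local_addresses = dict(zip(students, campus_network.hosts()))

for name, local in local_addresses.items():
    # The outside world sees only the shared address; the campus sees each machine.
    print(f"{name}: global {GLOBAL_ADDRESS}, local {local}")

print(campus_network.is_private)  # True
```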

The early Internet was called ARPANET, after the U.S. Advanced Research Projects Agency (which added “Defense” to its name and became DARPA in 1973), and consisted of just four hosts: UCLA, Stanford, UC Santa Barbara, and the University of Utah. Now there are over half a million hosts, and each of those hosts likely serves thousands of people (Central Intelligence Agency, “Country Comparison: Internet Hosts,” World Factbook, https://www.cia.gov/library/publications/the-world-factbook/rankorder/2184rank.html). Each host uses protocols to connect to an ever-growing network of computers. Because of this, the Internet does not exist in any one place in particular; rather, it is the name we give to the huge network of interconnected computers that collectively form the entity that we think of as the Internet. The Internet is not a physical structure; it is the protocols that make this communication possible.

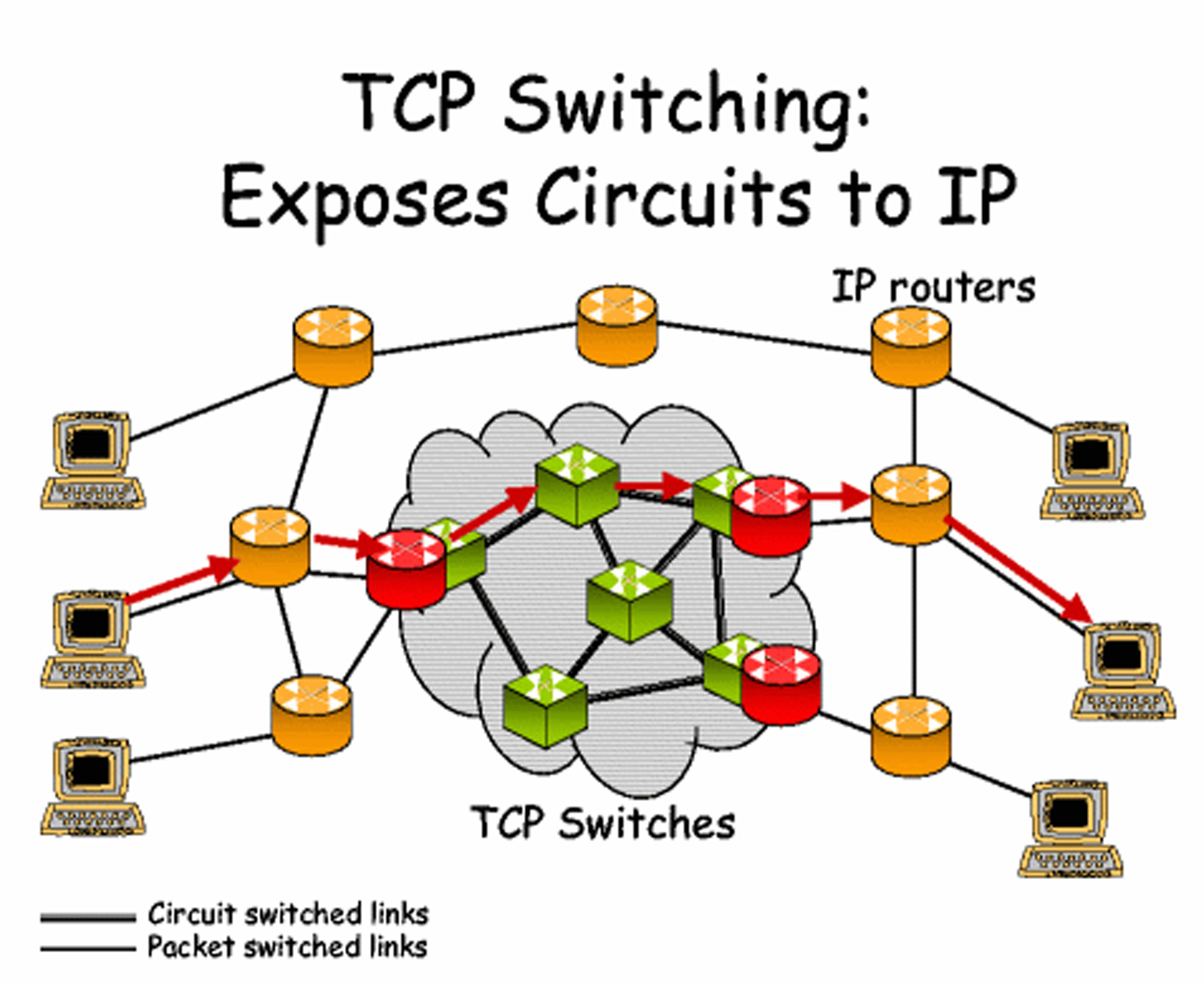

Figure 11.3

A TCP gateway is like a post office because of the way that it directs information to the correct location.

One of the other core components of the Internet is the Transmission Control Protocol (TCP) gateway. Proposed in a 1974 paper, the TCP gateway acts “like a postal service” (Vinton Cerf, Yogen Dalal, and Carl Sunshine, “Specification of Internet Transmission Control Program,” December 1974, http://tools.ietf.org/html/rfc675). Without knowing a specific physical address, any computer on the network can ask for the owner of any IP address, and the TCP gateway will consult its directory of IP address listings to determine exactly which computer the requester is trying to contact. The development of this technology was an essential building block in the interlinking of networks, as computers could now communicate with each other without knowing the specific address of a recipient; the TCP gateway would figure it all out. In addition, the TCP gateway checks for errors and ensures that data reaches its destination uncorrupted. Today, this combination of TCP gateways and IP addresses is called TCP/IP and is essentially a worldwide phone book for every host on the Internet.

You’ve Got Mail: The Beginnings of the Electronic Mailbox

E-mail has, in one sense or another, been around for quite a while. Originally, electronic messages were recorded within a single mainframe computer system. Each person working on the computer would have a personal folder, so sending that person a message required nothing more than creating a new document in that person’s folder. It was just like leaving a note on someone’s desk, so that the person would see it when he or she logged onto the computer (Ian Peter, “The History of Email,” The Internet History Project, 2004, http://www.nethistory.info/History%20of%20the%20Internet/email.html).

However, once networks began to develop, things became slightly more complicated. Computer programmer Ray Tomlinson is credited with inventing the naming system we have today, using the @ symbol to denote the server (or host, from the previous section). In other words, name@gmail.com tells the host “gmail.com” (Google’s e-mail server) to drop the message into the folder belonging to “name.” Tomlinson is also credited with sending the first network e-mail, using his program SNDMSG, in 1971. This simple standard for e-mail is often cited as one of the most important factors in the rapid spread of the Internet, and e-mail is still one of the most widely used Internet services.
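The name@host convention is simple enough to sketch in Python. The `split_address` and `deliver` helpers and the in-memory `mail_store` are hypothetical stand-ins for illustration; real mail servers follow much more detailed rules than this.

```python
def split_address(address: str):
    """Split 'name@host' into the mailbox name and the host that stores it."""
    name, separator, host = address.rpartition("@")
    if not separator or not name or not host:
        raise ValueError(f"not a valid address: {address!r}")
    return name, host

# Hypothetical mail store: one set of folders per host, one folder per name.
mail_store = {}

def deliver(address: str, message: str) -> None:
    """Drop the message into the folder belonging to 'name' on 'host'."""
    name, host = split_address(address)
    mail_store.setdefault(host, {}).setdefault(name, []).append(message)

deliver("name@gmail.com", "Hello from 1971!")
print(split_address("name@gmail.com"))  # ('name', 'gmail.com')
print(mail_store["gmail.com"]["name"])  # ['Hello from 1971!']
```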

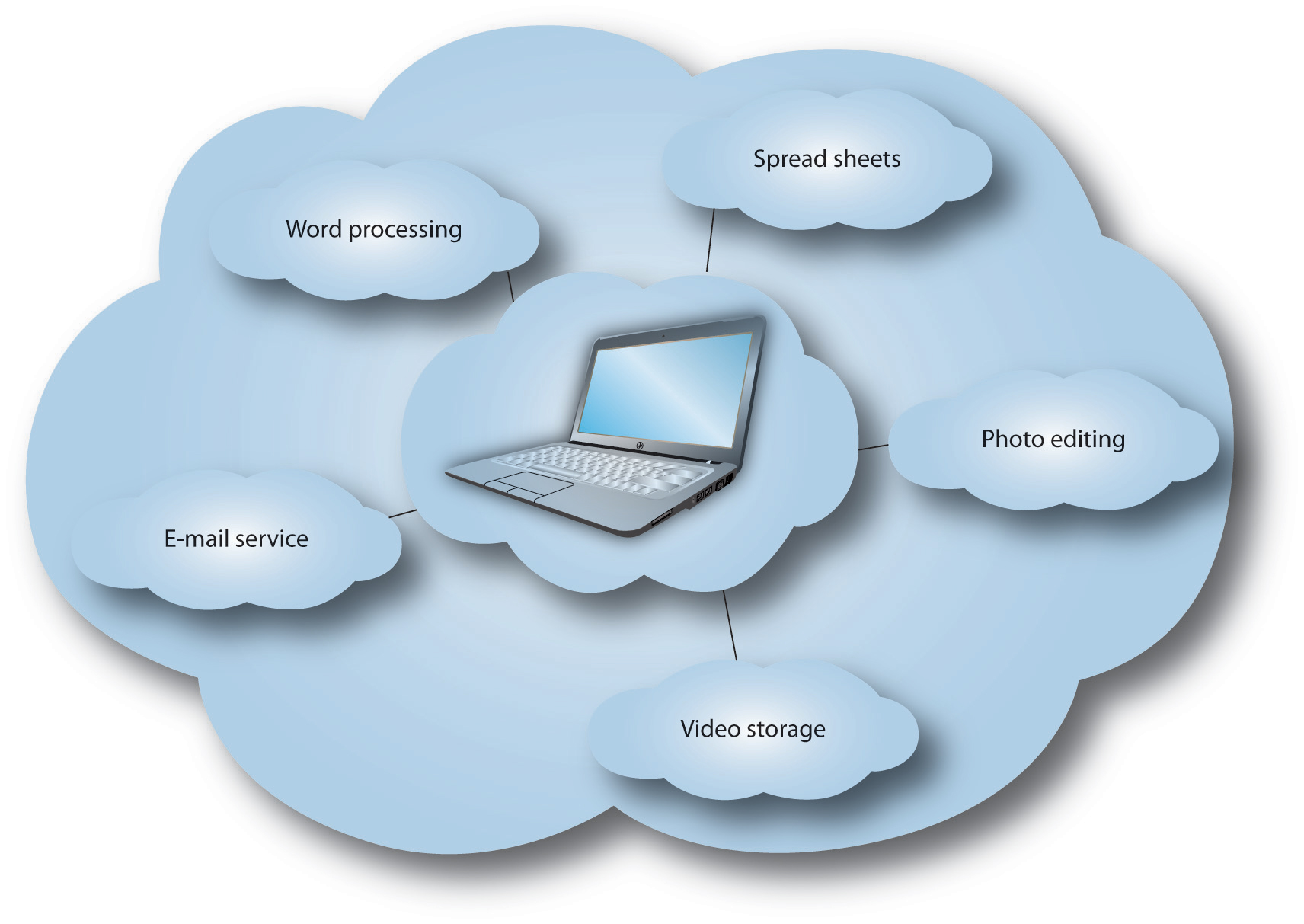

The use of e-mail grew in large part because of later commercial developments, especially America Online, that made connecting to e-mail much easier than it had been at its inception. Internet service providers (ISPs) packaged e-mail accounts with Internet access, and almost all web browsers (such as Netscape, discussed later in the section) included a form of e-mail service. In addition to the ISPs, e-mail services like Hotmail and Yahoo! Mail provided free e-mail addresses paid for by small text ads at the bottom of every e-mail message sent. These free “webmail” services soon expanded to comprise a large part of the e-mail services that are available today. Far from the original maximum inbox sizes of a few megabytes, today’s e-mail services, like Google’s Gmail service, generally provide gigabytes of free storage space.

E-mail has revolutionized written communication. The speed and relatively inexpensive nature of e-mail makes it a prime competitor of postal services—including FedEx and UPS—that pride themselves on speed. Communicating via e-mail with someone on the other end of the world is just as quick and inexpensive as communicating with a next-door neighbor. However, the growth of Internet shopping and online companies such as Amazon.com has in many ways made the postal service and shipping companies more prominent—not necessarily for communication, but for delivery and remote business operations.

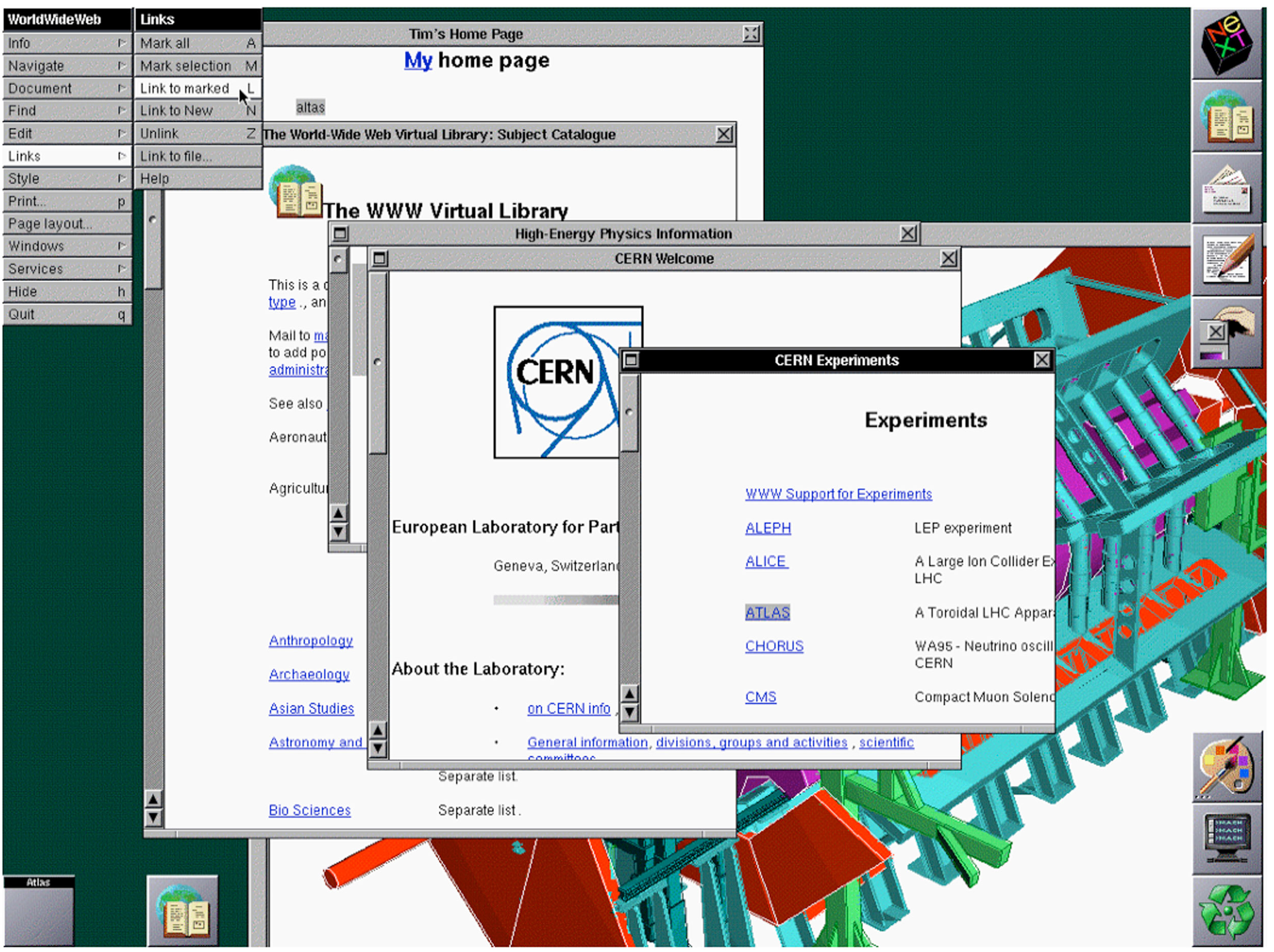

Hypertext: Web 1.0

In 1989, Tim Berners-Lee, a graduate of Oxford University and software engineer at CERN (the European particle physics laboratory), had the idea of using a new kind of protocol to share documents and information throughout the local CERN network. Instead of transferring regular text-based documents, he created a new language called hypertext markup language (HTML). Hypertext was a new word for text that goes beyond the boundaries of a single document. Hypertext can include links to other documents (hyperlinks), text-style formatting, images, and a wide variety of other components. The basic idea is that documents can be constructed out of a variety of links and can be viewed just as if they are on the user’s computer.

This new language required a new communication protocol so that computers could interpret it, and Berners-Lee decided on the name hypertext transfer protocol (HTTP). Through HTTP, hypertext documents can be sent from computer to computer and can then be interpreted by a browser, which turns the HTML files into readable web pages. The browser that Berners-Lee created, called World Wide Web, was a combination browser-editor, allowing users to view other HTML documents and create their own (Tim Berners-Lee, “The WorldWideWeb Browser,” 2009, http://www.w3.org/People/Berners-Lee/WorldWideWeb).

Figure 11.4

Tim Berners-Lee’s first web browser was also a web page editor.
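To make the idea of hypertext concrete, the sketch below uses Python's standard `html.parser` module to read a small HTML document and list the hyperlinks it contains, which is a tiny part of what a browser does when it interprets a page. The sample page, its URLs, and the `LinkCollector` class are invented for illustration.

```python
from html.parser import HTMLParser

# A made-up hypertext document with two hyperlinks.
PAGE = """<html><body>
<h1>Kite Club</h1>
<p>See the <a href="http://example.org/kites.html">kite gallery</a>
or the <a href="/contact.html">contact page</a>.</p>
</body></html>"""

class LinkCollector(HTMLParser):
    """Collect the target of every <a href="..."> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if tag == "a":
            for key, value in attrs:
                if key == "href":
                    self.links.append(value)

collector = LinkCollector()
collector.feed(PAGE)
print(collector.links)  # ['http://example.org/kites.html', '/contact.html']
```

The page itself is plain text, which is why a document like this can be written in the simplest editor.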

Modern browsers, like Microsoft Internet Explorer and Mozilla Firefox, only allow for the viewing of web pages; other increasingly complicated tools are now marketed for creating web pages, although even the most complicated page can be written entirely from a program like Windows Notepad. The reason web pages can be created with the simplest tools is the adoption of certain protocols by the most common browsers. Because Internet Explorer, Firefox, Apple Safari, Google Chrome, and other browsers all interpret the same code in more or less the same way, creating web pages is as simple as learning how to speak the language of these browsers.

In 1991, the same year that Berners-Lee created his web browser, the Internet connection service Q-Link was renamed America Online, or AOL for short. This service would eventually grow to employ over 20,000 people, on the basis of making Internet access available (and, critically, simple) for anyone with a telephone line. Although the web in 1991 was not what it is today, AOL’s software allowed its users to create communities based on just about any subject, and it only required a dial-up modem—a device that connects any computer to the Internet via a telephone line—and the telephone line itself.

In addition, AOL incorporated two technologies, chat rooms and Instant Messenger, into a single program (along with a web browser). Chat rooms allowed many users to type live messages to a “room” full of people, while Instant Messenger allowed two users to communicate privately via text-based messages. The most important aspect of AOL was its encapsulation of all these once-disparate programs into a single user-friendly bundle. Although AOL was later disparaged for customer service issues like its users’ inability to deactivate their service, its role in bringing the Internet to mainstream users was instrumental (Tom Zeller, Jr., “Canceling AOL? Just Offer Your Firstborn,” New York Times, August 29, 2005, http://www.nytimes.com/2005/08/29/technology/29link.html).

In contrast to AOL’s proprietary services, the World Wide Web had to be viewed through a standalone web browser. The first of these browsers to make its mark was the program Mosaic, released by the National Center for Supercomputing Applications at the University of Illinois. Mosaic was offered for free and grew very quickly in popularity due to features that now seem integral to the web. Things like bookmarks, which allow users to save the location of particular pages without having to remember them, and images, now an integral part of the web, were all inventions that made the web more usable for many people (National Center for Supercomputing Applications, “About NCSA Mosaic,” 2010, http://www.ncsa.illinois.edu/Projects/mosaic.html).

Although the web browser Mosaic has not been updated since 1997, developers who worked on it went on to create Netscape Navigator, an extremely popular browser during the 1990s. AOL later bought the Netscape company, and the Navigator browser was discontinued in 2008, largely because Netscape Navigator had lost the market to Microsoft’s Internet Explorer web browser, which came preloaded on Microsoft’s ubiquitous Windows operating system. However, Netscape had long been converting its Navigator software into an open-source program called Mozilla Firefox, which is now the second-most-used web browser on the Internet (detailed in Table 11.1) (NetMarketShare, “Browser Market Share,” http://marketshare.hitslink.com/browser-market-share.aspx?qprid=0&qpcal=1&qptimeframe=M&qpsp=132). Firefox represents about a quarter of the market, which is not bad considering its lack of advertising and Microsoft’s natural advantage of packaging Internet Explorer with the majority of personal computers.

Table 11.1 Browser Market Share (as of February 2010)

| Browser | Total Market Share |
|---|---|
| Microsoft Internet Explorer | 62.12% |
| Firefox | 24.43% |
| Chrome | 5.22% |
| Safari | 4.53% |
| Opera | 2.38% |

Source: Courtesy Net Applications.com http://www.netapplications.com/

For Sale: The Web

As web browsers became more available as a less-moderated alternative to AOL’s proprietary service, the web became something like a free-for-all of startup companies. The web of this period, often referred to as Web 1.0, featured many specialty sites that used the Internet’s ability for global, instantaneous communication to create a new type of business. Another name for this free-for-all of the 1990s is the “dot-com boom.” During the boom, it seemed as if almost anyone could build a website and sell it for millions of dollars. However, the “dot-com crash” that followed in 2000 and 2001 seemed to say otherwise. Quite a few of these Internet startup companies went bankrupt, taking their shareholders down with them. Alan Greenspan, then the chairman of the U.S. Federal Reserve, called this phenomenon “irrational exuberance” (Alan Greenspan, “The Challenge of Central Banking in a Democratic Society,” lecture, American Enterprise Institute for Public Policy Research, Washington, DC, December 5, 1996, http://www.federalreserve.gov/boarddocs/speeches/1996/19961205.htm), in large part because investors did not necessarily know how to analyze these particular business plans, and companies that had never turned a profit could be sold for millions. The new business models of the Internet may have done well in the stock market, but they were not necessarily sustainable. In many ways, investors collectively failed to analyze the business prospects of these companies, and once they realized their mistakes (and the companies went bankrupt), much of the recent market growth evaporated. The invention of new technologies can bring with it the belief that old business tenets no longer apply, but this dangerous belief (the “irrational exuberance” Greenspan spoke of) is not necessarily conducive to long-term growth.

Some lucky dot-com businesses formed during the boom survived the crash and are still around today. For example, eBay, with its online auctions, turned what seemed like a dangerous practice (sending money to a stranger you met over the Internet) into a daily occurrence. A less-fortunate company, eToys.com, got off to a promising start (its stock quadrupled on the day it went public in 1999) but then filed for Chapter 11 bankruptcy in 2001 (Cecily Barnes, “eToys files for Chapter 11,” CNET, March 7, 2001, http://news.cnet.com/2100-1017-253706.html).

One of these startups, theGlobe.com, provided one of the earliest social networking services that exploded in popularity. When theGlobe.com went public, its stock shot from a target price of $9 to a close of $63.50 a share (Dawn Kawamoto, “TheGlobe.com’s IPO one for the books,” CNET, November 13, 1998, http://news.cnet.com/2100-1023-217913.html). The site itself was started in 1995, building its business on advertising. As skepticism about the dot-com boom grew and advertisers became increasingly skittish about the value of online ads, theGlobe.com ceased to be profitable and shut its doors as a social networking site (theglobe.com, “About Us,” 2009, http://www.theglobe.com/). Although advertising is pervasive on the Internet today, the current model, largely based on the highly targeted Google AdSense service, did not come around until much later. In the earlier dot-com years, the same ad might be shown on thousands of different web pages, whereas now advertising is often specifically targeted to the content of an individual page.

However, that did not spell the end of social networking on the Internet. Social networking had been going on since at least the invention of Usenet in 1979 (detailed later in the chapter), but the recurring problem was always the same: profitability. This model of free access to user-generated content departed from almost anything previously seen in media, and revenue streams would have to be just as radical.

The Early Days of Social Media

The shared, generalized protocols of the Internet have allowed it to be easily adapted and extended into many different facets of our lives. The Internet shapes everything, from our day-to-day routine—the ability to read newspapers from around the world, for example—to the way research and collaboration are conducted. There are three important aspects of communication that the Internet has changed, and these have instigated profound changes in the way we connect with one another socially: the speed of information, the volume of information, and the “democratization” of publishing, or the ability of anyone to publish ideas on the web.

One of the Internet’s largest and most revolutionary changes has come about through social networking. Because of Twitter, we can now see what all our friends are doing in real time; because of blogs, we can consider the opinions of complete strangers who may never write in traditional print; and because of Facebook, we can find people we haven’t talked to for decades, all without making a single awkward telephone call.

Recent years have seen an explosion of new content and services; although the phrase “social media” now seems to be synonymous with websites like Facebook and Twitter, it is worthwhile to consider all the ways a social media platform affects the Internet experience.

How Did We Get Here? The Late 1970s, Early 1980s, and Usenet

Almost as soon as TCP stitched the various networks together, a former DARPA scientist named Larry Roberts founded the company Telenet, the first commercial packet-switching company. Two years later, in 1977, the invention of the dial-up modem (in combination with the wider availability of personal computers like the Apple II) made it possible for anyone around the world to access the Internet. With availability extended beyond purely academic and military circles, the Internet quickly became a staple for computer hobbyists.

One of the consequences of the spread of the Internet to hobbyists was the founding of Usenet. In 1979, Duke University graduate students Tom Truscott and Jim Ellis connected three computers in a small network and used a series of programming scripts to post and receive messages. In a very short span of time, this system spread all over the burgeoning Internet. Usenet worked much like an electronic version of a community bulletin board: anyone with a computer could post a topic or reply.

The group was fundamentally and explicitly anarchic, as outlined by the posting “What is Usenet?” This document says, “Usenet is not a democracy…there is no person or group in charge of Usenet…Usenet cannot be a democracy, autocracy, or any other kind of ‘-acy’” (Mark Moraes, Chip Salzenberg, and Gene Spafford, “What is Usenet?” December 28, 1999, http://www.faqs.org/faqs/usenet/what-is/part1/). Usenet was not used only for socializing, however, but also for collaboration. In some ways, the service allowed a new kind of collaboration that seemed like the start of a revolution: “I was able to join rec.kites and collectively people in Australia and New Zealand helped me solve a problem and get a circular two-line kite to fly,” one user told the United Kingdom’s Guardian (Simon Jeffery and others, “A People’s History of the Internet: From Arpanet in 1969 to Today,” Guardian (London), October 23, 2009, http://www.guardian.co.uk/technology/interactive/2009/oct/23/internet-arpanet).

GeoCities: Yahoo! Pioneers

Fast-forward to 1995: The president and founder of Beverly Hills Internet, David Bohnett, announces that the name of his company is now “GeoCities.” GeoCities built its business by allowing users (“homesteaders”) to create web pages in “communities” for free, with the stipulation that the company placed a small advertising banner at the top of each page. Anyone could register a GeoCities site and subsequently build a web page about a topic. Almost all of the community names, like Broadway (live theater) and Athens (philosophy and education), were centered on specific topics. (While GeoCities is no longer in business, the Internet Archive maintains the site at http://www.archive.org/web/geocities.php. Information taken from December 21, 1996.)

This idea of centering communities on specific topics may have come from Usenet. In Usenet, the group name alt.rec.kites refers to a specific topic (kites) within a category (recreation) within a larger community (alternative topics). This hierarchical model allowed users to organize themselves across the vastness of the Internet, even on a large site like GeoCities. The difference with GeoCities was that it allowed users to do much more than post only text (the limitation of Usenet), while constraining them to a relatively small pool of resources. Although each GeoCities user had only a few megabytes of web space, standardized pictures (like mailbox icons and back buttons) were hosted on GeoCities’s main server. GeoCities was such a large part of the Internet, and these standard icons were so ubiquitous, that they have now become a veritable part of the Internet’s cultural history. The Web Elements category of the site Internet Archaeology is a good example of how pervasive GeoCities graphics became (Internet Archaeology, 2010, http://www.internetarchaeology.org/swebelements.htm).
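The hierarchical naming described above can be illustrated with a short Python sketch that reads a dotted name from the broadest community down to the specific topic. The `hierarchy` helper is a hypothetical name used only for this illustration and is not part of any Usenet software.

```python
def hierarchy(group_name: str):
    """Return each level of a dotted group name, from broadest to most specific."""
    parts = group_name.split(".")
    return [".".join(parts[:depth]) for depth in range(1, len(parts) + 1)]

print(hierarchy("alt.rec.kites"))  # ['alt', 'alt.rec', 'alt.rec.kites']
```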

GeoCities built its business on a freemium model, where basic services are free but subscribers pay extra for things like commercial pages or shopping carts. Other Internet businesses, like Skype and Flickr, use the same model to keep a vast user base while still profiting from frequent users. Since loss of online advertising revenue was seen as one of the main causes of the dot-com crash, many current web startups are turning toward this freemium model to diversify their income streams (Claire Cain Miller, “Ad Revenue on the Web? No Sure Bet,” New York Times, May 24, 2009, http://www.nytimes.com/2009/05/25/technology/start-ups/25startup.html).

GeoCities’s model was so successful that the company Yahoo! bought it for $3.6 billion at its peak in 1999. At the time, GeoCities was the third-most-visited site on the web (behind Yahoo! and AOL), so it seemed like a sure bet. A decade later, on October 26, 2009, Yahoo! closed GeoCities for good in every country except Japan.

Diversification of revenue has become one of the most crucial elements of Internet businesses; from The Wall Street Journal online to YouTube, almost every website is now looking for multiple income streams to support its services.

Key Takeaways

- The two primary characteristics of the original Internet were decentralization and free, open protocols that anyone could use. As a result of its decentralized “web” model of organization, the Internet can store data in many different places at once. This makes it very useful for backing up data and very difficult to destroy data that might be unwanted. Protocols play an important role in this, because they allow some degree of control to exist without a central command structure.

- Two of the most important technological developments were the personal computer (such as the Apple II) and the dial-up modem, which allowed anyone with a phone line to access the developing Internet. America Online also played an important role, making it very easy for practically anyone with a computer to use the Internet. Another development, the web browser, allowed for access to and creation of web pages all over the Internet.

- With the advent of the web browser, it seemed as if anyone could make a website that people wanted to use. The problem was that these sites were driven largely by venture capital and grossly inflated initial public offerings of their stock. After failing to secure any real revenue stream, their stock plummeted, the market crashed, and many of these companies went out of business. In later years, companies tried to diversify their sources of revenue, particularly by using a “freemium” model, in which a company would both sell premium services and advertise, while offering a free pared-down service to casual users.

Exercises

Websites have many different ways of paying for themselves, and this can say a lot about both the site and its audience. The business models of today’s websites may also directly reflect the lessons learned during the early days of the Internet. Start this exercise by reviewing a list of common ways that websites pay for themselves, how they arrived at these methods, and what it might say about them:

- Advertising: The site probably has many casual viewers and may not necessarily be well established. If there are targeted ads (such as ads directed toward stay-at-home parents with children), then it is possible the site is successful with a small audience.

- Subscription option: The site may be a news site that prides itself on accuracy of information or lack of bias, whose regular readers are willing to pay a premium for the guarantee of quality material. Alternately, the site may cater to a small demographic of Internet users by providing them with exclusive, subscription-only content.

- Selling services: Online services, such as file hosting, or offline services and products are probably the clearest way to determine a site’s revenue stream. However, these commercial sites often are not prized for their unbiased information, and their bias can greatly affect the content on the site.

Choose a website that you visit often, and list which of these revenue streams the site might have. How might this affect the content on the site? Is there a visible effect, or does the site try to hide it? Consider how events during the early history of the Internet may have affected the way the site operates now. Write down a revenue stream that the site does not currently have and how the site designers might implement such a revenue stream.

11.2 Social Media and Web 2.0

Learning Objectives

- Identify the major social networking sites, and give possible uses and demographics for each one.

- Show the positive and negative effects of blogs on the distribution and creation of information.

- Explain the ways privacy has been addressed on the Internet.

- Identify new information that marketers can use because of social networking.

Although GeoCities lost market share, and although theGlobe.com never really made it to the 21st century, social networking (a website that provides a way for people to interact with each other, often involving “friends” and some method of communication, whether through photos, videos, or text) has persisted. There are many different types of social media (a blanket term for any social networking service that connects people directly to one another on the Internet, unlike TV, radio, or newspapers) available today, from social networking sites like Facebook to blogging services like Blogger and WordPress.com. All these sites bring something different to the table, and a few of them even try to bring just about everything to the table at once.

Social Networking

Social networking services like Facebook, Twitter, LinkedIn (a social networking service that caters to business professionals looking for networking opportunities), Google Buzz (Google’s social networking service that is built into its Gmail service), and MySpace provide a limited but public platform for users to create a “profile.” This can range anywhere from the 140-character (that’s letters and spaces, not words) “tweets” on Twitter to the highly customizable MySpace, which allows users to blog, customize color schemes, add background images, and play music. Each of these services has its key demographic; MySpace, for example, is particularly geared toward younger users. Its huge array of features made it attractive to this demographic at first, but eventually it was overrun with corporate marketing and solicitations for pornographic websites, leading many users to abandon the service. In addition, competing social networking sites like Facebook offer superior interfaces that have lured away many of MySpace’s users. MySpace has attempted to catch up by upgrading its own interface, but it now faces the almost insurmountable obstacle of already-satisfied users of competing social networking services. As Internet technology evolves rapidly, most users have few qualms about moving to whichever site offers the better experience; most users have profiles and accounts on many services at once. But as relational networks become more and more established and concentrated on a few social media sites, it becomes increasingly difficult for newcomers and lagging challengers to offer the same rich networking experience. For a Facebook user with hundreds of friends in his or her social network, switching to MySpace and bringing along his or her entire network of friends is a daunting and infeasible prospect.

Google has attempted to circumvent the problem of luring users to create new social networks by building its Buzz service into its popular Gmail service, ensuring that Buzz has a built-in user base and lowering the social costs of joining a new social network by leveraging users’ Gmail contact lists. It remains to be seen if Google will be truly successful in establishing a vital new social networking service, but its tactic of integrating Buzz into Gmail underscores how difficult it has become to compete with established social networks like Twitter and Facebook.

Whereas MySpace initially catered to a younger demographic, LinkedIn caters to business professionals looking for networking opportunities. LinkedIn is free to join and allows users to post resumes and job qualifications (rather than astrological signs and favorite TV shows). Its tagline, “Relationships matter,” emphasizes the role of an increasingly networked world in business; just as a musician might use MySpace to promote a new band, a LinkedIn user can use the site to promote professional services. While these two sites have basically the same structure, they fulfill different purposes for different social groups; the character of social networking is highly dependent on the type of social circle.

Twitter offers a different approach to social networking, allowing users to “tweet” 140-character messages to their “followers,” making it something of a hybrid of instant messaging and blogging. Twitter is openly searchable, meaning that anyone can visit the site and quickly find out what other Twitter users are saying about any subject. Twitter has proved useful for journalists reporting on breaking news, as well as highlighting the “best of” the Internet. Twitter has also been useful for marketers looking for a free public forum to disseminate marketing messages. It became profitable in December 2009 through a $25 million deal allowing Google and Microsoft to display its users’ 140-character messages in their search results.Eliot Van Buskirk, “Twitter Earns First Profit Selling Search to Google, Microsoft,” Wired, December 21, 2009, http://www.wired.com/epicenter/2009/12/twitter-earns-first-profit-selling-search-to-google-microsoft. Facebook, originally deployed exclusively to Ivy League schools, has since opened its doors to anyone over 13 with an e-mail account. With the explosion of the service and its huge growth among older demographics, “My parents joined Facebook” has become a common complaint.See the blog at http://myparentsjoinedfacebook.com/ for examples on the subject.
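
Twitter's 140-character limit counts every character, spaces included, rather than words. The following is a minimal sketch of that idea, assuming that Python's built-in `len` is an acceptable way to count characters; it is not Twitter's actual counting code, and the names `TWEET_LIMIT`, `fits_in_tweet`, and `characters_left` are hypothetical.

```python
TWEET_LIMIT = 140  # the limit described in this chapter


def fits_in_tweet(message: str) -> bool:
    """Return True if the message is within the character limit."""
    return len(message) <= TWEET_LIMIT


def characters_left(message: str) -> int:
    """Return how many characters remain (negative if over the limit)."""
    return TWEET_LIMIT - len(message)


print(characters_left("hello world"))  # 129: the space counts as a character
print(fits_in_tweet("a" * 141))        # False: one character too many
```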

Another category of social media, blogs began as an online, public version of a diary or journal. Short for “web logs,” these personal sites give anyone a platform to write about anything they want to. Posting tweets on the Twitter service is considered micro-blogging (because of the extremely short length of the posts). Some services, like LiveJournal, highlight their ability to provide up-to-date reports on personal feelings, even going so far as to add a “mood” shorthand at the end of every post. The Blogger service (now owned by Google) allows users with Google accounts to follow friends’ blogs and post comments. WordPress.com, the hosted service built on the open-source blogging platform available at WordPress.org, and LiveJournal both follow the freemium model by allowing a basic selection of settings for free, with the option to pay for things like custom styles and photo hosting space. What these all have in common, however, is their bundling of social networking (such as the ability to easily link to and comment on friends’ blogs) with an expanded platform for self-expression. At this point, most traditional media companies have incorporated blogs, Twitter, and other social media as a way to allow their reporters to update instantly and often. This form of media convergence, discussed in detail in Section 11.3 "The Effects of the Internet and Globalization on Popular Culture and Interpersonal Communication" of this chapter, is now a necessary part of doing business.

There are many other types of social media out there, many of which can be called to mind with a single name: YouTube (video sharing), Wikipedia (open-source encyclopedia composed of “wikis” editable by any user), Flickr (photo sharing), and Digg (content sharing). Traditional media outlets have begun referring to these social media services and others like them as “Web 2.0.” Web 2.0 is not a new version of the web; rather, the term is a reference to the increased focus on user-generated content and social interaction on the web, as well as the evolution of online tools to facilitate that focus. Instead of relying on professional reporters to get information about a protest in Iran, a person could just search for “Iran” on Twitter and likely end up with hundreds of tweets linking to everything from blogs to CNN.com to YouTube videos from Iranian citizens themselves. In addition, many of these tweets may actually be instant updates from people using Twitter in Iran. This allows people to receive information straight from the source, without being filtered through news organizations or censored by governments.

Going Viral

In 2009, Susan Boyle, an unemployed middle-aged Scottish woman, appeared on Britain’s Got Talent and sang “I Dreamed a Dream” from the musical Les Misérables, becoming an international star almost overnight. It was not her television performance alone that catapulted her to fame, sent her subsequently released album to the top of the Billboard charts, and kept it there for 6 weeks. What did it was a YouTube video of her performance, viewed more than 87 million times and counting.BritainsSoTalented, “Susan Boyle - Singer - Britains Got Talent 2009,” 2009, http://www.youtube.com/watch?v=9lp0IWv8QZY.

Figure 11.5

Susan Boyle turned from a successful TV contestant into an international celebrity when the YouTube video of her performance went viral.

Media that is spread from person to person when, for example, a friend sends you a link saying “You’ve got to see this!” is said to have “gone viral” (that is, a video, news story, or photo is e-mailed or sent from person to person without any direction from a mainstream source). Marketing and advertising agencies call advertising that makes use of this phenomenon “viral marketing” (an attempt by marketers to produce things that “go viral” in order to build hype for a product). Yet many YouTube sensations have not come from large marketing firms. For instance, the four-piece pop-punk band OK Go filmed a music video on a tiny budget for their song “Here It Goes Again” and released it exclusively on YouTube in 2006. Featuring a choreographed dance done on eight separate treadmills, the video quickly became a viral sensation and, as of May 2011, had 7,265,825 views. The video helped OK Go attract millions of new fans and earned them a Grammy award in 2007, making it one of the most notable successes of viral Internet marketing. Viral marketing is, however, notoriously unpredictable and is liable to spawn remixes, spinoffs, and spoofs that can dilute or damage the messages that marketers intend to spread. Yet, when it is successful, viral marketing can reach millions of people for very little money and can even make it into the mainstream news.

Recent successes and failures in viral marketing demonstrate how difficult it is for marketers to control their message as it is unleashed virally. In 2007, the band Radiohead released their album In Rainbows online, allowing fans to download it for any amount of money they chose—including for free. Despite practically giving the album away, the digital release of In Rainbows still pulled in more money than Radiohead’s previous album, Hail to the Thief, while the band simultaneously sold a huge number of $80 collector editions and still sold physical CDs months after the digital release became available.New Musical Express, “Radiohead Reveal How Successful ‘In Rainbows’ Download Really Was,” October 15, 2008, http://www.nme.com/news/radiohead/40444. In contrast, the food giant Healthy Choice enlisted Classymommy.com blogger Colleen Padilla to write a sponsored review of its product, leading to a featured New York Times article on the blogger (not the product), which gave the product only a passing mention.Pradnya Joshi, “Approval by a Blogger May Please a Sponsor,” New York Times, July 12, 2009, http://www.nytimes.com/2009/07/13/technology/internet/13blog.html. Often, a successfully marketed product will reach some people through the Internet and then break through into the mainstream media. Yet as the article about Padilla shows, sometimes the person writing about the product overshadows the product itself.

Not all viral media is marketing, however. In 2007, someone posted a link to a new trailer for Grand Theft Auto IV on the video games message board of the web forum 4chan.org. When users followed the link, they were greeted not with a video game trailer but with Rick Astley singing his 1987 hit “Never Gonna Give You Up.” This technique of redirecting someone to that particular music video became known as Rickrolling and quickly became one of the most well-known Internet memes (any catchphrase, video, or piece of media that becomes a cultural symbol on the Internet) of all time.Fox News, “The Biggest Little Internet Hoax on Wheels Hits Mainstream,” April 22, 2008, http://www.foxnews.com/story/0,2933,352010,00.html. An Internet meme is a concept that quickly replicates itself throughout the Internet, and it is often nonsensical and absurd. Another meme, “Lolcats,” consists of misspelled captions (“I can has cheezburger?” is a classic example) over pictures of cats. Often, these memes take on a metatextual quality, such as the meme “Milhouse is not a meme,” in which the character Milhouse (from the TV show The Simpsons) is told that he is not a meme. Chronicling memes is notoriously difficult, because they typically spring into existence seemingly overnight, propagate rapidly, and disappear before ever making it onto the radar of mainstream media, or even the mainstream Internet user.

Benefits and Problems of Social Media

Social media allows an unprecedented volume of personal, informal communication in real time from anywhere in the world. It allows users to keep in touch with friends on other continents, yet keeps the conversation as casual as a Facebook wall post. In addition, blogs allow us to gauge a wide variety of opinions and have given “breaking news” a whole new meaning. Now, news can be distributed through many major outlets almost instantaneously, and different perspectives on any one event can be aired concurrently. In addition, news organizations can harness bloggers as sources of real-time news, in effect outsourcing some of their news-gathering efforts to bystanders on the scene. This practice of harnessing the efforts of several individuals online to solve a problem is known as crowdsourcing.

The downside of the seemingly infinite breadth of online information is that there is often not much depth to the coverage of any given topic. The superficiality of information on the Internet is a common gripe among many journalists who are now rushed to file news reports several times a day in an effort to compete with the “blogosphere,” or the crowd of bloggers who post both original news stories and aggregate previously published news from other sources. Whereas traditional print organizations at least had the “luxury” of the daily print deadline, now journalists are expected to blog or tweet every story and file reports with little or no analysis, often without adequate time to confirm the reliability of their sources.Ken Auletta, “Non-Stop News,” Annals of Communications, New Yorker, January 25, 2010, http://www.newyorker.com/reporting/2010/01/25/100125fa_fact_auletta.

Additionally, news aggregators (services like Google News that aggregate stories from major professional news sources and present them in a streamlined format) profit from linking to journalists’ stories at major newspapers and selling advertising, but these profits are not shared with the news organizations and journalists who created the stories. It is often difficult for journalists to keep up with the immediacy of the nonstop news cycle, and with revenues for their efforts being diverted to news aggregators, journalists and news organizations increasingly lack the resources to keep up this fast pace. Twitter presents a similar problem: Instead of getting news from a specific newspaper, many people simply read the articles that are linked from a Twitter feed. As a result, the news cycle leaves journalists no time for analysis or cross-examination. Increasingly, they will simply report, for example, on what a politician or public relations representative says without following up on these comments or fact-checking them. This further shortens the news cycle and makes it much easier for journalists to be exploited as the mouthpieces of propaganda.

Consequently, the very presence of blogs and their seeming importance even among mainstream media has made some critics wary. Internet entrepreneur Andrew Keen is one of these people, and his book The Cult of the Amateur follows up on the famous thought experiment, often attributed to T. H. Huxley (the grandfather of Aldous Huxley), suggesting that infinite monkeys given infinite typewriters would, given infinite time, eventually write Hamlet: “In our Web 2.0 world, the typewriters aren’t quite typewriters, but rather networked personal computers, and the monkeys aren’t quite monkeys, but rather Internet users.”Andrew Keen, The Cult of the Amateur: How Today’s Internet Is Killing Our Culture (New York: Doubleday, 2007). Keen also suggests that the Internet is really just a case of my-word-against-yours, where bloggers are not required to back up their arguments with credible sources (generally, any source with authorial or editorial backing; anonymous sources, or people who do not give their real names, are often not credible unless they are vouched for by a known credible source). “These days, kids can’t tell the difference between credible news by objective professional journalists and what they read on [a random website].”Ibid. Follow Keen on Twitter: http://twitter.com/ajkeen. Commentators like Keen worry that this trend will lead to young people’s inability to distinguish credible information from a mass of sources, eventually leading to a sharp decrease in credible sources of information.

For defenders of the Internet, this argument seems a bit overwrought: “A legitimate interest in the possible effects of significant technological change in our daily lives can inadvertently dovetail seamlessly into a ‘kids these days’ curmudgeonly sense of generational degeneration, which is hardly new.”Greg Downey, “Is Facebook Rotting Our Children’s Brains?” Neuroanthropology.net, March 2, 2009, http://neuroanthropology.net/2009/03/02/is-facebook-rotting-our-childrens-brains/. Greg Downey, who runs the collaborative blog Neuroanthropology, says that fear of kids on the Internet—and on social media in particular—can slip into “a ‘one-paranoia-fits-all’ approach to technological change.” For the argument that online experiences are “devoid of cohesive narrative and long-term significance,” Downey offers that, on the contrary, “far from evacuating narrative, some social networking sites might be said to cause users to ‘narrativize’ their experience, engaging with everyday life already with an eye toward how they will represent it on their personal pages.”

Another argument in favor of social media defies the warning that time spent on social networking sites is destroying the social skills of young people. “The debasement of the word ‘friend’ by [Facebook’s] use of it should not make us assume that users can’t tell the difference between friends and Facebook ‘friends,’” writes Downey. On the contrary, social networks (like the Usenet of the past) can even provide a place for people with more obscure interests to meet one another and share commonalities. In addition, marketing through social media is completely free—making it a valuable tool for small businesses with tight marketing budgets. A community theater can invite all of its “fans” to a new play for less money than putting an ad in the newspaper, and this direct invitation is far more personal and specific. Many people see services like Twitter, with its “followers,” as more semantically appropriate than the “friends” found on Facebook and MySpace, and because of this Twitter has, in many ways, changed yet again the way social media is conceived. Rather than connecting with “friends,” Twitter allows social media to be purely a source of information, thereby making it far more appealing to adults. In addition, while 140 characters may seem like a constraint to some, it can be remarkably useful to the time-strapped user looking to catch up on recent news.

Social media’s detractors also point to the sheer banality of much of the conversation on the Internet. Again, Downey keeps this in perspective: “The banality of most conversation is also pretty frustrating,” he says. Downey suggests that many of the young people using social networking tools see them as just another aspect of communication. However, Downey warns that online bullying has the potential to pervade larger social networks while shielding perpetrators through anonymity.

Another downside of many of the Internet’s segmented communities is that users tend to be exposed only to information they are interested in and opinions they agree with. This lack of exposure to novel ideas and contrary opinions can create or reinforce a lack of understanding among people with different beliefs, and make political and social compromise more difficult to come by.

While the situation may not be as dire as Keen suggests in his book, there are clearly some important arguments to consider regarding the effects of the web and social media in particular. The main concerns come down to two things: the possibility that the volume of amateur, user-generated content online is overshadowing better-researched sources, and the questionable ability of users to tell the difference between the two.

Education, the Internet, and Social Media

Although Facebook began at Harvard University and quickly became popular among the Ivy League colleges, the social network has since been lambasted as a distraction for students. Instead of studying, the argument claims, students will sit in the library and browse Facebook, messaging their friends and getting nothing done. Two doctoral candidates, Aryn Karpinski (Ohio State University) and Adam Duberstein (Ohio Dominican University), studied the effects of Facebook use on college students and found that students who use Facebook generally receive a full grade lower—a half point on the GPA scale—than students who do not.Anita Hamilton, “What Facebook Users Share: Lower Grades,” Time, April 14, 2009, http://www.time.com/time/business/article/0,8599,1891111,00.html. Correlation does not imply causation, though, as Karpinski said that Facebook users may just be “prone to distraction.”

On the other hand, students’ access to technology and the Internet may allow them to pursue their education to a greater degree than they could otherwise. At a school in Arizona, students are issued laptops instead of textbooks, and some of their school buses have Wi-Fi Internet access. As a result, bus rides, including the long trips that are often a requirement of high school sports, are spent studying. Of course, the students had laptops long before their bus rides were connected to the Internet, but the Wi-Fi technology has “transformed what was often a boisterous bus ride into a rolling study hall.”Sam Dillon, “Wi-Fi Turns Rowdy Bus Into Rolling Study Hall,” New York Times, February 11, 2010, http://www.nytimes.com/2010/02/12/education/12bus.html. Even though not all students studied all the time, enabling students to work on bus rides fulfilled the school’s goal of extending the educational hours beyond the usual 8 a.m. to 3 p.m.

Privacy Issues With Social Networking

Social networking provides unprecedented ways to keep in touch with friends, but that ability can sometimes be a double-edged sword. Users can update friends with every latest achievement—“[your name here] just won three straight games of solitaire!”—but may also unwittingly be updating bosses and others from whom particular bits of information should be hidden.

The shrinking of privacy online has been rapidly exacerbated by social networks, and for a surprising reason: conscious decisions made by participants. Putting personal information online, even if it is set to be viewed by only select friends, has become fairly standard. Dr. Kieron O’Hara studies privacy in social media and calls this era “intimacy 2.0,” a riff on the buzzword “Web 2.0.”Zoe Kleinman, “How Online Life Distorts Privacy Rights for All,” BBC News, January 8, 2010, http://news.bbc.co.uk/2/hi/technology/8446649.stm. One of O’Hara’s arguments is that legal issues of privacy are based on what is called a “reasonable standard.” According to O’Hara, the excessive sharing of personal information on the Internet by some constitutes an offense to the privacy of all, because it lowers the “reasonable standard” that can be legally enforced. In other words, as cultural tendencies toward privacy degrade on the Internet, it affects not only the privacy of those who choose to share their information, but also the privacy of those who do not.

Privacy Settings on Facebook

With over 500 million users, it is no surprise that Facebook is one of the emerging battlegrounds for privacy on the Internet. When Facebook updated its privacy settings for these users in late 2009, The Guardian reported that “privacy groups including the American Civil Liberties Union…[called] the developments ‘flawed’ and ‘worrisome.’”Bobbie Johnson, “Facebook Privacy Change Angers Campaigners,” Guardian (London), December 10, 2009, http://www.guardian.co.uk/technology/2009/dec/10/facebook-privacy.

Mark Zuckerberg, the founder of Facebook, discusses privacy issues on a regular basis in forums ranging from his official Facebook blog to conferences. At the Crunchies Awards in San Francisco in early 2010, Zuckerberg claimed that privacy was no longer a “social norm.”Bobbie Johnson, “Privacy No Longer a Social Norm, Says Facebook Founder,” Guardian (London), January 11, 2010, http://www.guardian.co.uk/technology/2010/jan/11/facebook-privacy. This statement follows from his company’s late-2009 decision to make public information sharing the default setting on Facebook. Whereas users were previously able to restrict public access to basic profile information like their names and friends, the new settings make this information publicly available with no option to make it private. Although Facebook publicly announced the changes, many outraged users first learned of the updates to the default privacy settings when they discovered—too late—that they had inadvertently broadcast private information. Facebook argues that the added complexity of the privacy settings gives users more control over their information. However, opponents counter that adding more complex privacy controls while simultaneously making public sharing the default setting for those controls is a blatant ploy to push casual users into sharing more of their information publicly—information that Facebook will then use to offer more targeted advertising.Kevin Bankston, “Facebook’s New Privacy Changes: The Good, the Bad, and the Ugly,” Deeplinks Blog, Electronic Frontier Foundation, December 9, 2009, http://www.eff.org/deeplinks/2009/12/facebooks-new-privacy-changes-good-bad-and-ugly.

In response to the new privacy policy, many users formed their own grassroots protest groups within Facebook. Responding to these critiques, Facebook changed its privacy policy again in May 2010 with three primary changes. First, privacy controls are simpler. Instead of various controls on multiple pages, there is now one main control that users can use to determine who can see their information. Second, Facebook made less information publicly available. Public information is now limited to basic information, such as a user’s name and profile picture. Finally, it is now easier to block applications and third-party websites from accessing user information.Maggie Lake, “Facebook’s privacy changes,” CNN, June 2, 2010, http://www.cnn.com/video/#/video/tech/2010/05/27/lake.facebook.pr.

Similar to the Facebook controversy, Google’s social networking Gmail add-on called Buzz automatically signed up Gmail users to “follow” the most e-mailed Gmail users in their address book. Because all of these lists were public by default, users’ most e-mailed contacts were made available for anyone to see. This was especially alarming for people like journalists who potentially had confidential sources exposed to a public audience. However, even though this mistake—which Google quickly corrected—created a lot of controversy around Buzz, it did not stop users from creating over 9 million posts in the first 2 days of the service.Todd Jackson, “Millions of Buzz users, and improvements based on your feedback,” Official Gmail Blog, February 11, 2010, http://gmailblog.blogspot.com/2010/02/millions-of-buzz-users-and-improvements.html. Google’s integration of Buzz into its Gmail service may have been upsetting to users not accustomed to the pitfalls of social networking, but Google’s misstep has not discouraged millions of others from trying the service, perhaps due to their experience dealing with Facebook’s ongoing issues with privacy infringement.

For example, Facebook’s old privacy settings integrated a collection of applications (written by third-party developers) that included everything from “Which American Idol Contestant Are You?” to an “Honesty Box” that allows friends to send anonymous criticism. “Allowing Honesty Box access will let it pull your profile information, photos, your friends’ info, and other content that it requires to work,” reads the disclaimer on the application installation page. The ACLU drew particular attention to the “app gap” that allowed “any quiz or application run by you to access information about you and your friends.”Nicole Ozer, “Facebook Privacy in Transition - But Where Is It Heading?” ACLU of Northern California, December 9, 2009, http://www.aclunc.org/issues/technology/blog/facebook_privacy_in_transition_-_but_where_is_it_heading.shtml. In other words, merely using someone else’s Honesty Box gave the program information about your “religion, sexual orientation, political affiliation, pictures, and groups.”Ibid. There are many reasons that unrelated applications may want to collect this information, but one of the most prominent is, by now, a very old story: selling products. The more information a marketer has, the better he or she can target a message, and the more likely it is that the recipient will buy something.

Figure 11.6

Zynga, one of the top social game developers on Facebook, created the game FarmVille. Because FarmVille is ad-supported and gives users the option to purchase FarmVille virtual currency with actual money, the game is free and accessible for everyone to play.

Social Media’s Effect on Commerce

Social media on the Internet has been around for a while, and it has always been of some interest to marketers. Because users willingly give these services demographic information (age, political preference, gender, and location), marketers can target advertising extremely efficiently. However, by the time Facebook’s population passed the 350-million mark, marketers were scrambling to harness social media. The increasingly difficult-to-reach younger demographic has been rejecting radios for Apple’s iPod mobile digital devices and TV for YouTube. Increasingly, marketers are turning to social networks as a way to reach these consumers. Culturally, these developments indicate a mistrust among consumers of traditional marketing techniques; marketers must now use new and more personalized ways of reaching consumers if they are going to sell their products.

The attempts of marketers to harness the viral spread of media on the Internet were discussed earlier in the chapter. Marketers try to determine the trend of things “going viral,” with the goal of getting millions of YouTube views; becoming a hot topic on Google Trends, a website that measures the most frequently searched topics on the web; or even just being the subject of a post on a well-known blog. For example, Procter & Gamble sent free samples of its Swiffer dust mop to stay-at-home-mom bloggers with a large online audience. And in 2008, the movie College (or College: The Movie) used its tagline “Best.Weekend.Ever.” as the prompt for a YouTube video contest. Contestants were invited to submit videos of their best college weekend ever, and the winner received a monetary prize.Jon Hickey, “Best Weekend Ever,” 2008, http://www.youtube.com/watch?v=pldG8MdEIOA.

What these two instances of marketing have in common is that they approach people who are already doing something they enjoy doing—blogging or making movies—and give them a relatively small amount of compensation for providing advertising. This differs from methods of traditional advertising because marketers seek to bridge a credibility gap with consumers. Marketers have been doing this for ages—long before breakfast cereal slogans like “Kid Tested, Mother Approved” or “Mikey likes it” ever hit the airwaves. The difference is that now the people pushing the products can be friends or family members, all via social networks.

For instance, in 2007, a program called Beacon was launched as part of Facebook. With Beacon, a Facebook user is confronted with the option to “share” an online purchase from partnering sites. For example, a user might buy a book from Amazon.com and check the corresponding “share” box in the checkout process, and all of his or her friends will receive a message notifying them that this person purchased and recommends this particular product. Explaining the reason for this shift in a New York Times article, Mark Zuckerberg said, “Nothing influences a person more than a trusted friend.”Louise Story, “Facebook Is Marketing Your Brand Preferences (With Your Permission),” New York Times, November 7, 2007, http://www.nytimes.com/2007/11/07/technology/07adco.html. However, many Facebook users did not want their purchasing information shared with other Facebookers, and the service was shut down in 2009 and subsequently became the subject of a class action lawsuit. Facebook’s troubles with Beacon illustrate the thin line between taking advantage of the tremendous marketing potential of social media and violating the privacy of users.

Facebook’s questionable alliance with marketers through Beacon was driven by a need to create reliable revenue streams. One of the most crucial aspects of social media is the profitability factor. In the 1990s, theGlobe.com was one of the promising new startups, but it went under almost as quickly as it rose due to lack of funds. The lesson of theGlobe.com has not gone unheeded by today’s social media services. For example, Twitter has sold access to its content to Google and Microsoft for $25 million, making users’ tweets searchable.

Google’s Buzz is one of the most interesting services in this respect, because Google’s main business is advertising, and it is a highly successful business. Google’s search algorithms allow it to target advertising to a user’s specific tastes. As Google enters the social media world, its advertising capabilities will only be compounded as users reveal more information about themselves via Buzz. Although it does not seem that users choose their social media services based on how the services generate their revenue streams, the issue of privacy in social media is in large part an issue of how much information users are willing to share with advertisers. For example, using Google’s search engine, Buzz, Gmail, and Blogger gives that single company an immense amount of information and a historically unsurpassed ability to market to specific groups. At this relatively early stage of the fledgling online social media business (both Twitter and Facebook only very recently turned a profit, so commerce has only recently come into play), it is impossible to say whether the commerce side of things will transform the way people use the services. If the uproar over Facebook’s Beacon is any lesson, however, the relationship between social media and advertising is ripe for controversy.

Social Media as a Tool for Social Change

The use of Facebook and Twitter in the recent political uprisings in the Middle East has brought to the fore the question of whether social media can be an effective tool for social change.

On January 14, 2011, after month-long protests against fraud, economic crisis, and lack of political freedom, the Tunisian public ousted President Zine El Abidine Ben Ali. Soon after the Tunisian rebellion, the Egyptian public expelled President Hosni Mubarak, who had ruled the country for 30 years. Almost immediately, people in other Middle Eastern countries such as Algeria, Libya, Yemen, and Bahrain also rose up against their oppressive governments in the hopes of obtaining political freedom.Grace Gamba, “Facebook Topples Governments in Middle East,” Brimstone Online, March 18, 2011, http://www.gshsbrimstone.com/news/2011/03/18/facebook-topples-governments-in-middle-east.

What is common among all these uprisings is the role played by social media. In nearly all of these countries, restrictions were imposed on the media and resistance to the government was brutally suppressed.Peter Beaumont, “Can Social Networking Overthrow a Government?” Morning Herald (Sydney), February 25, 2011, http://www.smh.com.au/technology/technology-news/can-social-networking-overthrow-a-government-20110225-1b7u6.html. This seems to have inspired the entire Middle East to organize online to rebel against tyrannical rule.Chris Taylor, “Why Not Call It a Facebook Revolution?” CNN, February 24, 2011, http://edition.cnn.com/2011/TECH/social.media/02/24/facebook.revolution. Protesters used social media not only to organize against their governments but also to share their struggles with the rest of the world.Gamba, “Facebook Topples Governments in Middle East.”

In Tunisia, protesters filled the streets by sharing information on Twitter.Taylor, “Why Not Call It a Facebook Revolution?” Egypt’s protests were organized on Facebook pages. Details of the demonstrations were circulated by both Facebook and Twitter. E-mail was used to distribute the activists’ guide to challenging the regime.Beaumont, “Can Social Networking Overthrow a Government?” Libyan dissenters spread the word about their demonstrations in similar ways.Taylor, “Why Not Call It a Facebook Revolution?”

Owing to the role played by Twitter and Facebook in helping protesters organize and communicate with each other, many have termed these rebellions “Twitter Revolutions”Evgeny Morozov, “How Much Did Social Media Contribute to Revolution in the Middle East?” Bookforum, April/May 2011, http://www.bookforum.com/inprint/018_01/7222. or “Facebook Revolutions”Eric Davis, “Social Media: A Force for Political Change in Egypt,” April 13, 2011, http://new-middle-east.blogspot.com/2011/04/social-media-force-for-political-change.html. and have credited social media for helping to bring down these regimes.Eleanor Beardsley, “Social Media Gets Credit for Tunisian Overthrow,” NPR, January 16, 2011, http://www.npr.org/2011/01/16/132975274/Social-Media-Gets-Credit-For-Tunisian-Overthrow.

During the unrest, social media outlets such as Facebook and Twitter helped protesters share information by communicating ideas continuously and instantaneously. Users took advantage of these unrestricted vehicles to share the most graphic details and images of the attacks on protesters, and to rally demonstrators.Beaumont, “Can Social Networking Overthrow a Government?” In other words, use of social media was about the ability to communicate across borders and barriers. It gave common people a voice and an opportunity to express their opinions.

Critics of social media, however, say that those calling the Middle East movements Facebook or Twitter revolutions are not giving credit where it is due.Alex Villarreal, “Social Media A Critical Tool for Middle East Protesters,” Voice of America, March 1, 2011, http://www.voanews.com/english/news/middle-east/Social-Media-a-Critical-Tool-for-Middle-East-Protesters-117202583.html. It is true that social media provided vital assistance during the unrest in the Middle East, but technology alone could not have brought about the revolutions. The critics argue that the resolve of the people to bring about change was most important and that this fact should be recognized.Taylor, “Why Not Call It a Facebook Revolution?”

Key Takeaways

- Social networking sites often encompass many aspects of other social media. For example, Facebook began as a collection of profile pictures with very little information, but soon expanded to include photo albums (like Flickr) and micro-blogging (like Twitter). Other sites, like MySpace, emphasize connections to music and customizable pages, catering to a younger demographic. LinkedIn specifically caters to a professional demographic by allowing only professionally relevant information.

- Blogs speed the flow of information around the Internet and give nonprofessionals with adequate time a critical way to investigate sources and news stories without needing the platform of a well-known publication. On the other hand, they can lead to an “echo chamber” effect, where they simply repeat one another and add nothing new. Often, the analysis is wide ranging, but it can also be shallow and lack the depth and knowledge of good critical journalism.

- Facebook has been the leader in privacy-related controversy, with its seemingly constant issues with privacy settings. One of the critical things to keep in mind is that as more people become comfortable with more information out in the open, the “reasonable standard” of privacy is lowered. This affects even people who would rather keep more things private.

- Social networking allows marketers to reach consumers directly and to know more about each specific consumer than ever before. Search algorithms allow marketers to place advertisements in areas that get the most traffic from targeted consumers. Whereas putting an ad on TV reaches all demographics, online advertisements can now be targeted specifically to different groups.

Exercises

- Draw a Venn diagram of two social networking sites mentioned in this chapter. Sign up for both of them (if you’re not signed up already) and make a list of their features and their interfaces. How do they differ? How are they the same?

- Write a few sentences about how a marketer might use these tools to reach different demographics.

11.3 The Effects of the Internet and Globalization on Popular Culture and Interpersonal Communication

Learning Objectives

- Describe the effects of globalization on culture.

- Identify the possible effects of news migrating to the Internet.

- Define the Internet paradox.

It’s in the name: World Wide Web. The Internet has broken down communication barriers between cultures in a way that could only be dreamed of in earlier generations. Now, almost any news service across the globe can be accessed on the Internet and, with the various translation services available (like Babelfish and Google Translate), be relatively understandable. In addition to the spread of American culture throughout the world, smaller countries are now able to cheaply export culture, news, entertainment, and even propaganda.